Have you ever wondered why, despite a flood of talent and competitive salaries, your team still struggles to hire truly effective machine learning engineers? The truth is uncomfortable: conventional hiring methods are often ill-suited to evaluating the complex skills and mindset that ML roles require. Relying on resume keywords, generic coding tests, or broad interviews without a clear rubric leads to costly mis-hires, slow team progress, and missed strategic opportunities.

If you want to hire ML developers who bring not only coding expertise but also deep domain understanding and impact-driven problem solving, it’s time to rethink hiring through the lens of scorecards. Yet creating a scorecard that genuinely captures what matters in ML engineering is easier said than done. Many hiring managers lean on outdated templates or generic competency frameworks that don’t address the unique demands of the role.

This post challenges you to discard assumptions about what a “good” hire looks like and to adopt a practical, research-backed, and nuanced checklist that brings clarity, consistency, and fairness to your hiring decisions. If you are looking to hire dedicated ML developers to scale existing teams, a well-crafted scorecard can be your secret weapon.

Why traditional hiring methods fail for ML roles

Hiring an ML engineer is fundamentally different from other software engineering roles. The technical dimensions are more interdisciplinary, involving data understanding, algorithmic insight, and iterative experimentation. Yet, typical hiring pipelines often resemble those for general software developers:

- Overemphasizing coding quizzes or algorithm puzzles that don’t mimic real ML problems. While coding skills are necessary, ML engineers must also think critically about model performance, data quality, and deployment challenges.

- Surface-level interviews that don’t probe for ML domain expertise or product impact. You might hire brilliant programmers who have never deployed a real-world model or considered its bias and fairness implications.

- Ignoring behavioral assessments related to collaboration, curiosity, and continuous learning. ML is an evolving field where engineers must adapt quickly and communicate complex concepts across teams.

The result is ambiguous assessment criteria, which lead to inconsistent feedback, unconscious bias, and a weak ability to predict future success.

How scorecards bring clarity and objectivity to hiring ML engineers

A scorecard is more than a checklist. It is a strategic tool that aligns your interviewers on which skills and traits matter, how to measure them, and what an ideal candidate profile looks like. Laszlo Bock, Google’s former head of People Operations, has pointed to research showing that structured interviews predict job performance considerably better than unstructured ones.

Scorecards replace guesswork with data-driven clarity. They counter “gut feeling” bias by requiring concrete examples from candidates and consistent scoring rubrics. For ML roles, they break complex skills down into observable actions and measurable outcomes, so that technical excellence, domain-specific knowledge, and soft skills each receive deliberate, comparable attention.
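To make “consistent scoring rubrics” concrete, here is a minimal Python sketch of an anchored rubric for a single criterion. The criterion name, the 1 to 4 scale, and the level descriptions are hypothetical examples rather than any standard; the point is that each score maps to an observable behavior and cannot be recorded without a concrete example from the interview.

```python
from dataclasses import dataclass

# Hypothetical anchored rubric for one criterion: each level describes an
# observable behavior, so interviewers score what they saw, not a hunch.
MODEL_EVALUATION_RUBRIC = {
    1: "Cannot name an evaluation metric beyond accuracy",
    2: "Names suitable metrics but cannot justify the choice",
    3: "Chooses metrics that fit the problem and explains why",
    4: "Also designs validation that guards against leakage and drift",
}


@dataclass
class CriterionScore:
    criterion: str
    score: int
    evidence: str  # the concrete example the candidate gave


def record_score(criterion: str, score: int, evidence: str,
                 rubric: dict[int, str]) -> CriterionScore:
    """Accept a score only if it is a rubric level and cites evidence."""
    if score not in rubric:
        raise ValueError(f"score must be one of {sorted(rubric)}")
    if not evidence.strip():
        raise ValueError("a score needs a concrete example from the interview")
    return CriterionScore(criterion, score, evidence.strip())
```

For example, `record_score("Model evaluation", 3, "Chose precision/recall over accuracy for a rare-event problem", MODEL_EVALUATION_RUBRIC)` succeeds, while the same call with an empty evidence string raises `ValueError`.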

What to include and avoid in an ML engineer scorecard: A detailed checklist

Below is a checklist designed to help you build or refine scorecards so you can hire ML developers with precision.

Inclusions

- Technical skills specific to machine learning
  - Proven ability to design, train, and optimize ML models
  - Experience with popular frameworks such as TensorFlow, PyTorch, or scikit-learn
  - Familiarity with data preprocessing, feature engineering, and model evaluation metrics
  - Understanding of model deployment at scale and performance monitoring

- Domain and problem-solving expertise
  - Ability to translate ambiguous business problems into ML solutions
  - Demonstrated understanding of data distribution, bias, and ethical considerations
  - Ability to articulate trade-offs among model accuracy, complexity, and interpretability

- Coding and software engineering best practices
  - Clean coding habits, with documentation and testing for ML pipelines
  - Experience with version control and collaborative workflows (e.g., Git, code reviews)

- Impact and project metrics
  - A clear account of past projects’ outcomes, such as improved KPIs, better model precision/recall, or reduced runtime
  - Evidence of iterative experimentation and data-driven improvements

- Behavioral traits and cultural fit
  - Curiosity about emerging ML trends and a continuous-learning mindset
  - Communication skills to explain complex technical topics to non-experts
  - Team collaboration and receptiveness to feedback

Avoid

- Generic coding tests that do not simulate ML challenges, such as pure algorithm puzzles unrelated to model building or data wrangling.

- Vague, unstructured interview questions that invite broad opinions rather than specific examples.

- Overreliance on academic credentials or certifications without assessing real-world application.

- Unscored or subjective assessments that lump all criteria together without weight or rubric.
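As a rough sketch of how weights and a rubric combine into one comparable number, the Python below assumes each of the five checklist categories is scored on a 1 to 4 rubric. The category labels and weights are hypothetical placeholders; set your own to reflect what the role actually needs.

```python
# Hypothetical weights for the five checklist categories (must sum to 1.0).
WEIGHTS = {
    "ML technical skills": 0.30,
    "Domain and problem solving": 0.25,
    "Software engineering practices": 0.15,
    "Impact and project metrics": 0.15,
    "Behavioral traits": 0.15,
}


def weighted_score(scores: dict[str, int],
                   weights: dict[str, float] = WEIGHTS,
                   scale_max: int = 4) -> float:
    """Combine per-category rubric scores (1..scale_max) into one weighted score."""
    # Every weighted category must be scored; unknown categories are rejected.
    missing = weights.keys() - scores.keys()
    unknown = scores.keys() - weights.keys()
    if missing or unknown:
        raise ValueError(f"missing: {sorted(missing)}, unknown: {sorted(unknown)}")
    # Allow for tiny floating-point error when checking the weight total.
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    for category, score in scores.items():
        if not 1 <= score <= scale_max:
            raise ValueError(f"{category}: score {score} is outside 1..{scale_max}")
    return sum(weights[c] * scores[c] for c in weights)
```

With scores of 4, 3, 3, 2, and 3 in the order listed, `round(weighted_score(scores), 2)` gives 3.15. Rejecting a missing category is a deliberate choice: a blank usually means an interviewer skipped it, and silently counting it as zero would distort comparisons between candidates.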

Practical ML engineer scorecard template employers can use or adapt

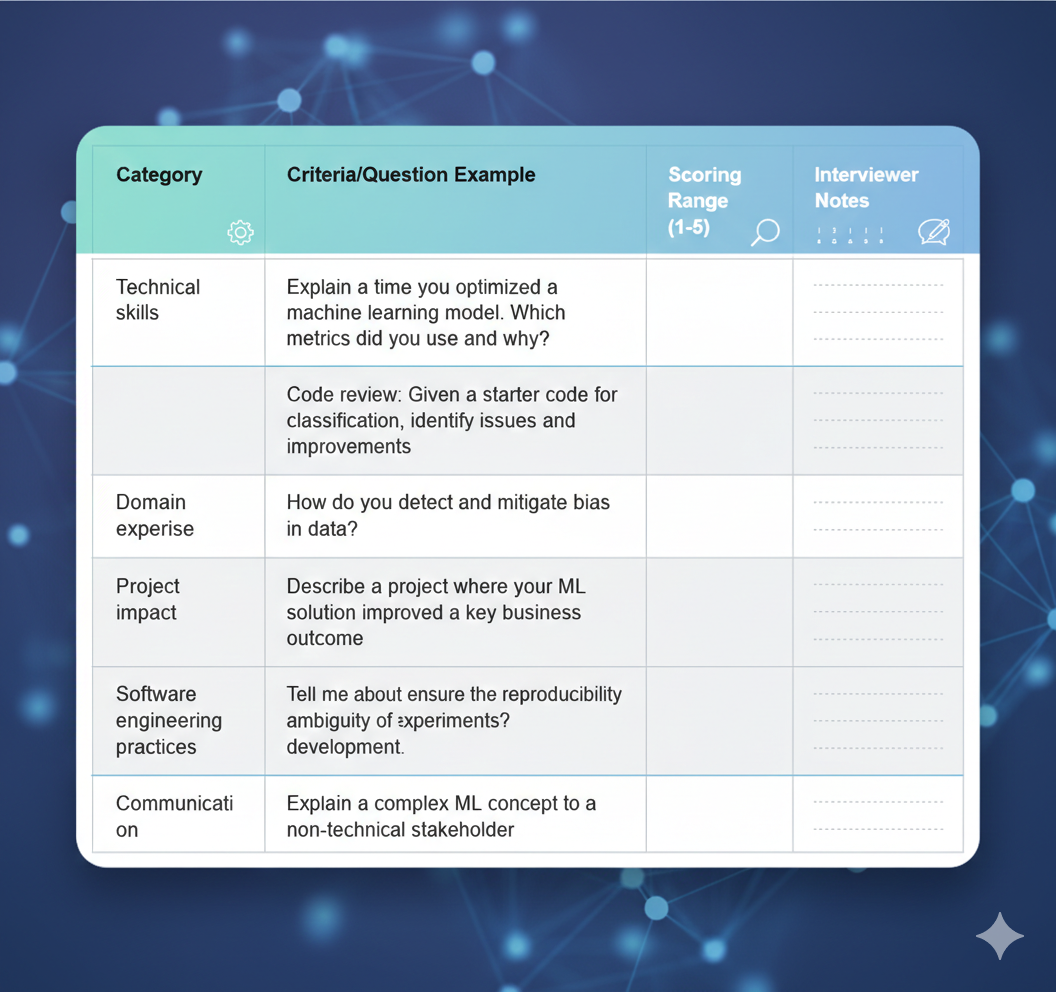

You can replicate this simple yet comprehensive scorecard for your interview panel to standardize evaluation.

This template encourages interviewers to gather evidence-based responses anchored in your organization’s priorities.
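When several interviewers fill in the same template, comparing their scores is where standardization pays off. This Python sketch assumes each interviewer submits a dict of criterion scores on the same scale; it averages each criterion and flags any criterion where scores differ by two or more points (a hypothetical threshold), so the debrief can focus on genuine disagreements.

```python
from statistics import mean


def summarize_panel(panel: dict[str, dict[str, int]],
                    spread_threshold: int = 2) -> dict[str, dict]:
    """Average each criterion across interviewers and flag large disagreements."""
    # Collect every criterion that at least one interviewer scored.
    criteria = sorted({c for scores in panel.values() for c in scores})
    summary = {}
    for criterion in criteria:
        values = [scores[criterion] for scores in panel.values() if criterion in scores]
        spread = max(values) - min(values)
        summary[criterion] = {
            "mean": mean(values),
            "spread": spread,
            "discuss": spread >= spread_threshold,  # worth raising in the debrief
        }
    return summary
```

For a panel such as `{"Interviewer A": {"Model evaluation": 4, "Communication": 2}, "Interviewer B": {"Model evaluation": 3, "Communication": 4}}`, only "Communication" is flagged. A flag is a prompt to compare the recorded evidence, not an automatic verdict.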

Final thoughts

Employers who want to hire dedicated machine learning developers must stop treating ML hiring like traditional engineering hiring. Scorecards grounded in ML-specific technical, behavioral, and impact criteria give every interviewer a shared, evidence-based standard, which markedly improves your chances of selecting candidates who will drive real value.

By being clear about what you want to assess and avoiding common pitfalls, you can move your hiring process from arbitrary to data-informed, replacing guesswork with confident decisions.

If you are ready to refine your methods and hire ML developers capable of propelling your AI initiatives forward, adopting and customizing scorecards is a necessary next step.

Employers who embrace this approach will better identify, attract, and retain top-tier ML talent in an increasingly competitive market.

Want to streamline your ML workflows? Check out our guide to building clean, scalable pipelines: https://www.calyptus.co/blog/how-to-build-clean-scalable-ml-pipelines